ONNX 與 Python¶

接下來的章節重點介紹用來透過 Python API onnx 提供的函式來建構 ONNX 圖表的主要函式。

一個簡單的範例:線性迴歸¶

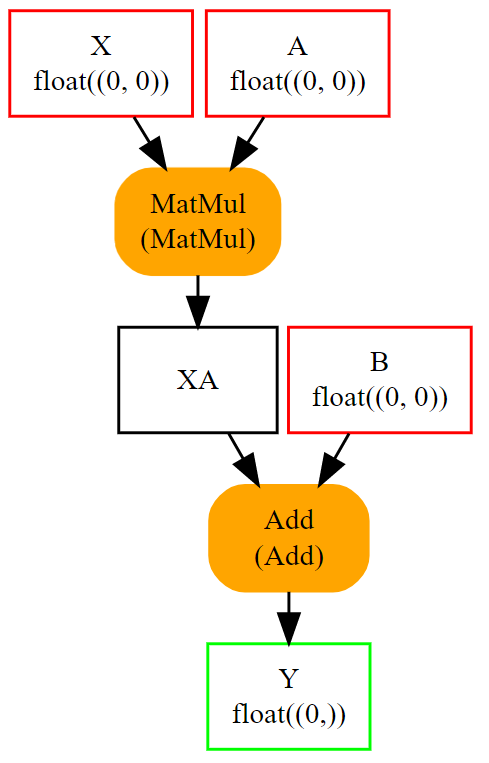

線性迴歸是機器學習中最簡單的模型,由以下表達式 \(Y = XA + B\) 所描述。我們可以將其視為三個變數的函數 \(Y = f(X, A, B)\),分解為 y = Add(MatMul(X, A), B)。這就是我們需要用 ONNX 運算子表示的內容。第一件事是使用 ONNX 運算子 來實作一個函數。ONNX 是強型別的。函數的輸入和輸出都必須定義形狀和類型。也就是說,我們需要四個函數來建立圖表,其中包含 建立函數

make_tensor_value_info:宣告一個變數(輸入或輸出),並指定其形狀和類型make_node:建立一個由運算 (運算子類型)、輸入和輸出所定義的節點make_graph:一個使用前兩個函數建立的物件來建立 ONNX 圖表的函數make_model:最後一個合併圖表和其他中繼資料的函數

在建立的過程中,我們需要為圖表中每個節點的每個輸入、輸出提供一個名稱。圖表的輸入和輸出由 onnx 物件定義,字串則用來參照中間結果。看起來像這樣。

# imports

from onnx import TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

# inputs

# 'X' is the name, TensorProto.FLOAT the type, [None, None] the shape

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

# outputs, the shape is left undefined

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

# nodes

# It creates a node defined by the operator type MatMul,

# 'X', 'A' are the inputs of the node, 'XA' the output.

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

# from nodes to graph

# the graph is built from the list of nodes, the list of inputs,

# the list of outputs and a name.

graph = make_graph([node1, node2], # nodes

'lr', # a name

[X, A, B], # inputs

[Y]) # outputs

# onnx graph

# there is no metadata in this case.

onnx_model = make_model(graph)

# Let's check the model is consistent,

# this function is described in section

# Checker and Shape Inference.

check_model(onnx_model)

# the work is done, let's display it...

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

name: "lr"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

空形狀 (None) 表示任何形狀,而定義為 [None, None] 的形狀則表示此物件是具有兩個維度的張量,沒有任何進一步的精確度。也可以藉由檢視圖表中每個物件的欄位來檢查 ONNX 圖表。

from onnx import TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

def shape2tuple(shape):

return tuple(getattr(d, 'dim_value', 0) for d in shape.dim)

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

# the list of inputs

print('** inputs **')

print(onnx_model.graph.input)

# in a more nicely format

print('** inputs **')

for obj in onnx_model.graph.input:

print("name=%r dtype=%r shape=%r" % (

obj.name, obj.type.tensor_type.elem_type,

shape2tuple(obj.type.tensor_type.shape)))

# the list of outputs

print('** outputs **')

print(onnx_model.graph.output)

# in a more nicely format

print('** outputs **')

for obj in onnx_model.graph.output:

print("name=%r dtype=%r shape=%r" % (

obj.name, obj.type.tensor_type.elem_type,

shape2tuple(obj.type.tensor_type.shape)))

# the list of nodes

print('** nodes **')

print(onnx_model.graph.node)

# in a more nicely format

print('** nodes **')

for node in onnx_model.graph.node:

print("name=%r type=%r input=%r output=%r" % (

node.name, node.op_type, node.input, node.output))

** inputs **

[name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

, name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

, name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

]

** inputs **

name='X' dtype=1 shape=(0, 0)

name='A' dtype=1 shape=(0, 0)

name='B' dtype=1 shape=(0, 0)

** outputs **

[name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

]

** outputs **

name='Y' dtype=1 shape=(0,)

** nodes **

[input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

, input: "XA"

input: "B"

output: "Y"

op_type: "Add"

]

** nodes **

name='' type='MatMul' input=['X', 'A'] output=['XA']

name='' type='Add' input=['XA', 'B'] output=['Y']

張量類型為整數 (= 1)。Helper 函數 onnx.helper.tensor_dtype_to_np_dtype() 提供 numpy 的對應類型。

from onnx import TensorProto

from onnx.helper import tensor_dtype_to_np_dtype, tensor_dtype_to_string

np_dtype = tensor_dtype_to_np_dtype(TensorProto.FLOAT)

print(f"The converted numpy dtype for {tensor_dtype_to_string(TensorProto.FLOAT)} is {np_dtype}.")

The converted numpy dtype for TensorProto.FLOAT is float32.

序列化¶

ONNX 是建立在 protobuf 的基礎之上。它新增了必要的定義來描述機器學習模型,而且大部分時間 ONNX 用來序列化或反序列化模型。第一節會解決這個需求。第二節介紹資料的序列化和反序列化,例如張量、稀疏張量...

模型序列化¶

模型需要儲存才能部署。ONNX 以 protobuf 為基礎。它可以將圖表儲存在磁碟上所需的空間降到最低。onnx 中的每個物件 (請參閱 Protos) 都可以使用方法 SerializeToString 進行序列化。這適用於整個模型。

from onnx import TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

def shape2tuple(shape):

return tuple(getattr(d, 'dim_value', 0) for d in shape.dim)

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

# The serialization

with open("linear_regression.onnx", "wb") as f:

f.write(onnx_model.SerializeToString())

# display

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

name: "lr"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

可以使用函數 load 還原圖表

from onnx import load

with open("linear_regression.onnx", "rb") as f:

onnx_model = load(f)

# display

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

name: "lr"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

看起來完全一樣。任何模型都可以使用這種方式進行序列化,除非它們大於 2 GB。protobuf 的大小限制在這個臨界值以下。接下來的章節會說明如何克服這個限制。

資料序列化¶

張量的序列化通常會像下面這樣進行

import numpy

from onnx.numpy_helper import from_array

numpy_tensor = numpy.array([0, 1, 4, 5, 3], dtype=numpy.float32)

print(type(numpy_tensor))

onnx_tensor = from_array(numpy_tensor)

print(type(onnx_tensor))

serialized_tensor = onnx_tensor.SerializeToString()

print(type(serialized_tensor))

with open("saved_tensor.pb", "wb") as f:

f.write(serialized_tensor)

<class 'numpy.ndarray'>

<class 'onnx.onnx_ml_pb2.TensorProto'>

<class 'bytes'>

而反序列化則像這樣

from onnx import TensorProto

from onnx.numpy_helper import to_array

with open("saved_tensor.pb", "rb") as f:

serialized_tensor = f.read()

print(type(serialized_tensor))

onnx_tensor = TensorProto()

onnx_tensor.ParseFromString(serialized_tensor)

print(type(onnx_tensor))

numpy_tensor = to_array(onnx_tensor)

print(numpy_tensor)

<class 'bytes'>

<class 'onnx.onnx_ml_pb2.TensorProto'>

[0. 1. 4. 5. 3.]

相同的 schema 可以用於但不限於 TensorProto

import onnx

import pprint

pprint.pprint([p for p in dir(onnx)

if p.endswith('Proto') and p[0] != '_'])

['AttributeProto',

'FunctionProto',

'GraphProto',

'MapProto',

'ModelProto',

'NodeProto',

'OperatorProto',

'OperatorSetIdProto',

'OperatorSetProto',

'OptionalProto',

'SequenceProto',

'SparseTensorProto',

'StringStringEntryProto',

'TensorProto',

'TensorShapeProto',

'TrainingInfoProto',

'TypeProto',

'ValueInfoProto']

可以使用函數 *load_tensor_from_string* (請參閱 載入 Proto) 來簡化此程式碼。

from onnx import load_tensor_from_string

with open("saved_tensor.pb", "rb") as f:

serialized = f.read()

proto = load_tensor_from_string(serialized)

print(type(proto))

<class 'onnx.onnx_ml_pb2.TensorProto'>

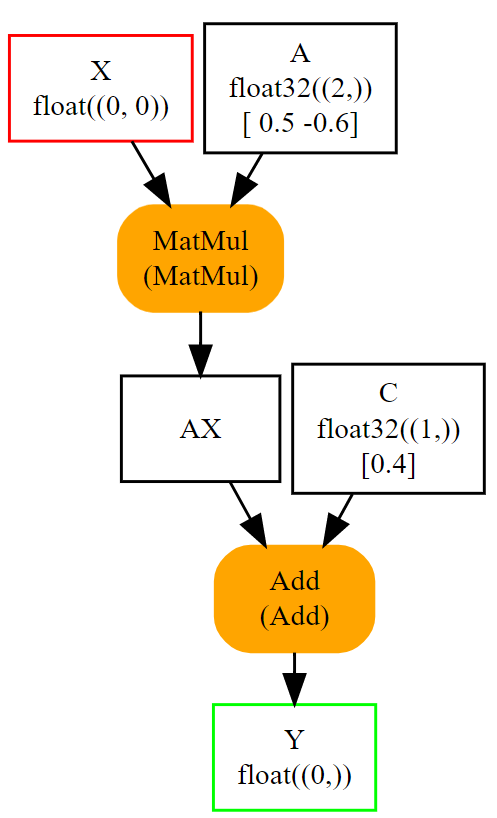

初始化程式、預設值¶

先前的模型假設線性迴歸的係數也是模型的輸入。這不是很方便。它們應該作為常數或初始化器成為模型本身的一部分,以遵循 ONNX 的語義。下一個範例修改前一個範例,將輸入 A 和 B 變為初始化器。此套件實作了兩個函式,可以從 NumPy 轉換為 ONNX,反之亦然(請參閱 array)。

onnx.numpy_helper.to_array:從 ONNX 轉換為 NumPyonnx.numpy_helper.from_array:從 NumPy 轉換為 ONNX

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

# initializers

value = numpy.array([0.5, -0.6], dtype=numpy.float32)

A = numpy_helper.from_array(value, name='A')

value = numpy.array([0.4], dtype=numpy.float32)

C = numpy_helper.from_array(value, name='C')

# the part which does not change

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['AX'])

node2 = make_node('Add', ['AX', 'C'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X], [Y], [A, C])

onnx_model = make_model(graph)

check_model(onnx_model)

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

output: "AX"

op_type: "MatMul"

}

node {

input: "AX"

input: "C"

output: "Y"

op_type: "Add"

}

name: "lr"

initializer {

dims: 2

data_type: 1

name: "A"

raw_data: "\000\000\000?\232\231\031\277"

}

initializer {

dims: 1

data_type: 1

name: "C"

raw_data: "\315\314\314>"

}

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

同樣,可以瀏覽 ONNX 結構以檢查初始化器的外觀。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

# initializers

value = numpy.array([0.5, -0.6], dtype=numpy.float32)

A = numpy_helper.from_array(value, name='A')

value = numpy.array([0.4], dtype=numpy.float32)

C = numpy_helper.from_array(value, name='C')

# the part which does not change

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['AX'])

node2 = make_node('Add', ['AX', 'C'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X], [Y], [A, C])

onnx_model = make_model(graph)

check_model(onnx_model)

print('** initializer **')

for init in onnx_model.graph.initializer:

print(init)

** initializer **

dims: 2

data_type: 1

name: "A"

raw_data: "\000\000\000?\232\231\031\277"

dims: 1

data_type: 1

name: "C"

raw_data: "\315\314\314>"

類型也定義為整數,其含義相同。在第二個範例中,只剩下一個輸入。輸入 A 和 B 已被移除。它們可以保留。在這種情況下,它們是可選的:每個與輸入具有相同名稱的初始化器都被視為預設值。如果沒有給定輸入,則會取代輸入。

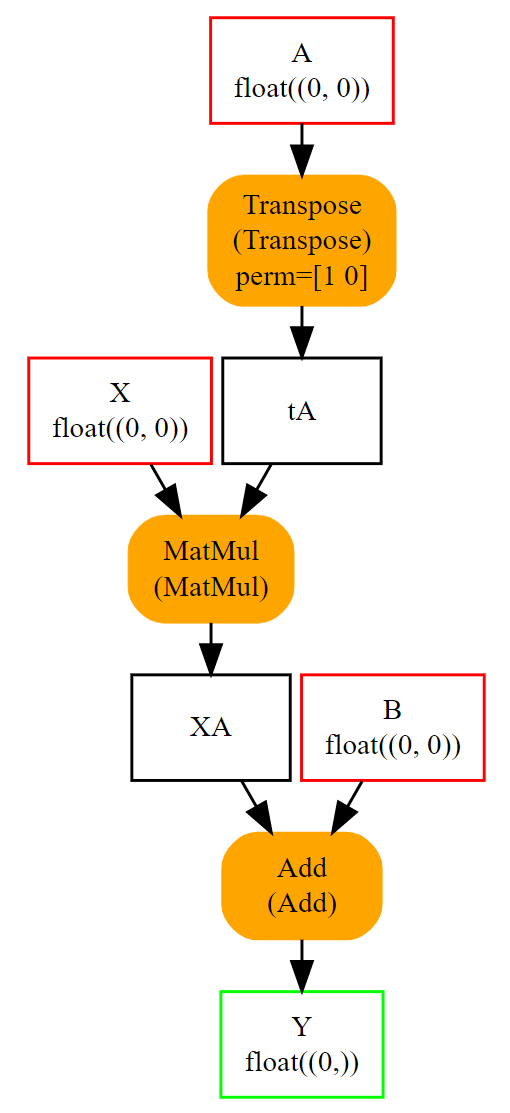

屬性¶

有些運算子需要屬性,例如 Transpose 運算子。讓我們建構表示式 \(y = XA' + B\) 或 y = Add(MatMul(X, Transpose(A)) + B) 的圖形。Transpose 需要一個屬性來定義軸的排列:perm=[1, 0]。它在函式 make_node 中以具名屬性的方式加入。

from onnx import TensorProto

from onnx.helper import (

make_model, make_node, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

# unchanged

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

# added

node_transpose = make_node('Transpose', ['A'], ['tA'], perm=[1, 0])

# unchanged except A is replaced by tA

node1 = make_node('MatMul', ['X', 'tA'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

# node_transpose is added to the list

graph = make_graph([node_transpose, node1, node2],

'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

# the work is done, let's display it...

print(onnx_model)

ir_version: 11

graph {

node {

input: "A"

output: "tA"

op_type: "Transpose"

attribute {

name: "perm"

ints: 1

ints: 0

type: INTS

}

}

node {

input: "X"

input: "tA"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

name: "lr"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

完整的 make 函式清單如下。其中許多函式在 make function 一節中說明。

import onnx

import pprint

pprint.pprint([k for k in dir(onnx.helper)

if k.startswith('make')])

['make_attribute',

'make_attribute_ref',

'make_empty_tensor_value_info',

'make_function',

'make_graph',

'make_map',

'make_map_type_proto',

'make_model',

'make_model_gen_version',

'make_node',

'make_operatorsetid',

'make_opsetid',

'make_optional',

'make_optional_type_proto',

'make_sequence',

'make_sequence_type_proto',

'make_sparse_tensor',

'make_sparse_tensor_type_proto',

'make_sparse_tensor_value_info',

'make_tensor',

'make_tensor_sequence_value_info',

'make_tensor_type_proto',

'make_tensor_value_info',

'make_training_info',

'make_value_info']

Opset 和 metadata¶

讓我們載入先前建立的 ONNX 檔案,並檢查它具有何種 metadata。

from onnx import load

with open("linear_regression.onnx", "rb") as f:

onnx_model = load(f)

for field in ['doc_string', 'domain', 'functions',

'ir_version', 'metadata_props', 'model_version',

'opset_import', 'producer_name', 'producer_version',

'training_info']:

print(field, getattr(onnx_model, field))

doc_string

domain

functions []

ir_version 11

metadata_props []

model_version 0

opset_import [version: 23

]

producer_name

producer_version

training_info []

它們大多數是空的,因為在建立 ONNX 圖形時沒有填入。其中兩個有值

from onnx import load

with open("linear_regression.onnx", "rb") as f:

onnx_model = load(f)

print("ir_version:", onnx_model.ir_version)

for opset in onnx_model.opset_import:

print("opset domain=%r version=%r" % (opset.domain, opset.version))

ir_version: 11

opset domain='' version=23

IR 定義了 ONNX 語言的版本。Opset 定義了正在使用的運算子的版本。在沒有任何精確度的情況下,ONNX 使用來自已安裝套件的最新可用版本。也可以使用另一個版本。

from onnx import load

with open("linear_regression.onnx", "rb") as f:

onnx_model = load(f)

del onnx_model.opset_import[:]

opset = onnx_model.opset_import.add()

opset.domain = ''

opset.version = 14

for opset in onnx_model.opset_import:

print("opset domain=%r version=%r" % (opset.domain, opset.version))

opset domain='' version=14

只要所有運算子都以 ONNX 指定的方式定義,就可以使用任何 opset。運算子 *Reshape* 的版本 5 將形狀定義為輸入,而不是像版本 1 中的屬性。opset 說明描述圖形時遵循的規範。

其他 metadata 可用於儲存任何資訊,儲存有關模型產生方式的資訊,或以版本號碼區分模型的方式。

from onnx import load, helper

with open("linear_regression.onnx", "rb") as f:

onnx_model = load(f)

onnx_model.model_version = 15

onnx_model.producer_name = "something"

onnx_model.producer_version = "some other thing"

onnx_model.doc_string = "documentation about this model"

prop = onnx_model.metadata_props

data = dict(key1="value1", key2="value2")

helper.set_model_props(onnx_model, data)

print(onnx_model)

ir_version: 11

producer_name: "something"

producer_version: "some other thing"

model_version: 15

doc_string: "documentation about this model"

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

name: "lr"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

version: 23

}

metadata_props {

key: "key1"

value: "value1"

}

metadata_props {

key: "key2"

value: "value2"

}

欄位 training_info 可用於儲存其他圖形。請參閱 training_tool_test.py 以了解其運作方式。

子圖形:測試和迴圈¶

它們通常被歸類為 *控制流程*。通常最好避免使用它們,因為它們不像矩陣運算那麼高效,矩陣運算更快且經過最佳化。

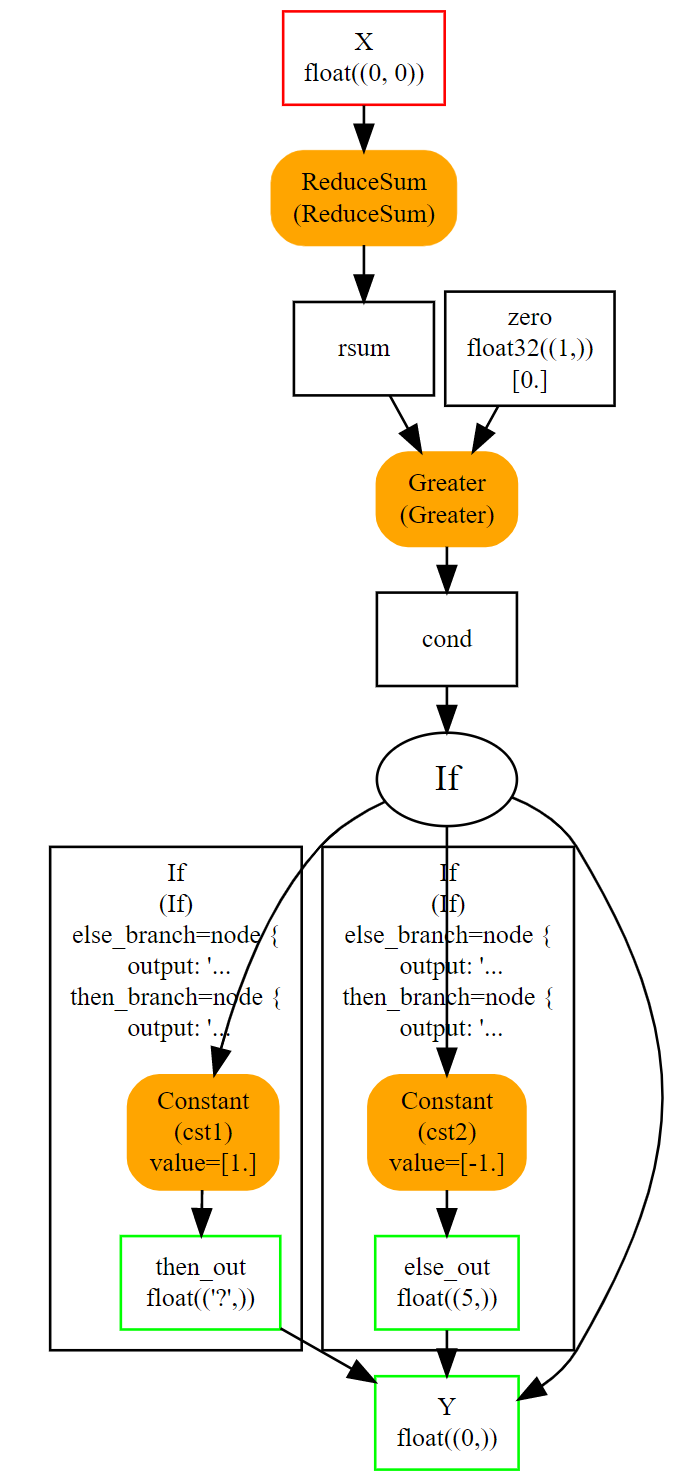

If¶

可以使用運算子 If 來實作測試。它根據一個布林值執行一個子圖形或另一個子圖形。由於函式通常需要批次中許多比較的結果,因此這並不常用。以下範例根據符號計算矩陣中所有浮點數的總和,返回 1 或 -1。

import numpy

import onnx

from onnx.helper import (

make_node, make_graph, make_model, make_tensor_value_info)

from onnx.numpy_helper import from_array

from onnx.checker import check_model

from onnxruntime import InferenceSession

# initializers

value = numpy.array([0], dtype=numpy.float32)

zero = from_array(value, name='zero')

# Same as before, X is the input, Y is the output.

X = make_tensor_value_info('X', onnx.TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', onnx.TensorProto.FLOAT, [None])

# The node building the condition. The first one

# sum over all axes.

rsum = make_node('ReduceSum', ['X'], ['rsum'])

# The second compares the result to 0.

cond = make_node('Greater', ['rsum', 'zero'], ['cond'])

# Builds the graph is the condition is True.

# Input for then

then_out = make_tensor_value_info(

'then_out', onnx.TensorProto.FLOAT, None)

# The constant to return.

then_cst = from_array(numpy.array([1]).astype(numpy.float32))

# The only node.

then_const_node = make_node(

'Constant', inputs=[],

outputs=['then_out'],

value=then_cst, name='cst1')

# And the graph wrapping these elements.

then_body = make_graph(

[then_const_node], 'then_body', [], [then_out])

# Same process for the else branch.

else_out = make_tensor_value_info(

'else_out', onnx.TensorProto.FLOAT, [5])

else_cst = from_array(numpy.array([-1]).astype(numpy.float32))

else_const_node = make_node(

'Constant', inputs=[],

outputs=['else_out'],

value=else_cst, name='cst2')

else_body = make_graph(

[else_const_node], 'else_body',

[], [else_out])

# Finally the node If taking both graphs as attributes.

if_node = onnx.helper.make_node(

'If', ['cond'], ['Y'],

then_branch=then_body,

else_branch=else_body)

# The final graph.

graph = make_graph([rsum, cond, if_node], 'if', [X], [Y], [zero])

onnx_model = make_model(graph)

check_model(onnx_model)

# Let's freeze the opset.

del onnx_model.opset_import[:]

opset = onnx_model.opset_import.add()

opset.domain = ''

opset.version = 15

onnx_model.ir_version = 8

# Save.

with open("onnx_if_sign.onnx", "wb") as f:

f.write(onnx_model.SerializeToString())

# Let's see the output.

sess = InferenceSession(onnx_model.SerializeToString(),

providers=["CPUExecutionProvider"])

x = numpy.ones((3, 2), dtype=numpy.float32)

res = sess.run(None, {'X': x})

# It works.

print("result", res)

print()

# Some display.

print(onnx_model)

result [array([1.], dtype=float32)]

ir_version: 8

graph {

node {

input: "X"

output: "rsum"

op_type: "ReduceSum"

}

node {

input: "rsum"

input: "zero"

output: "cond"

op_type: "Greater"

}

node {

input: "cond"

output: "Y"

op_type: "If"

attribute {

name: "else_branch"

g {

node {

output: "else_out"

name: "cst2"

op_type: "Constant"

attribute {

name: "value"

t {

dims: 1

data_type: 1

raw_data: "\000\000\200\277"

}

type: TENSOR

}

}

name: "else_body"

output {

name: "else_out"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_value: 5

}

}

}

}

}

}

type: GRAPH

}

attribute {

name: "then_branch"

g {

node {

output: "then_out"

name: "cst1"

op_type: "Constant"

attribute {

name: "value"

t {

dims: 1

data_type: 1

raw_data: "\000\000\200?"

}

type: TENSOR

}

}

name: "then_body"

output {

name: "then_out"

type {

tensor_type {

elem_type: 1

}

}

}

}

type: GRAPH

}

}

name: "if"

initializer {

dims: 1

data_type: 1

name: "zero"

raw_data: "\000\000\000\000"

}

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

domain: ""

version: 15

}

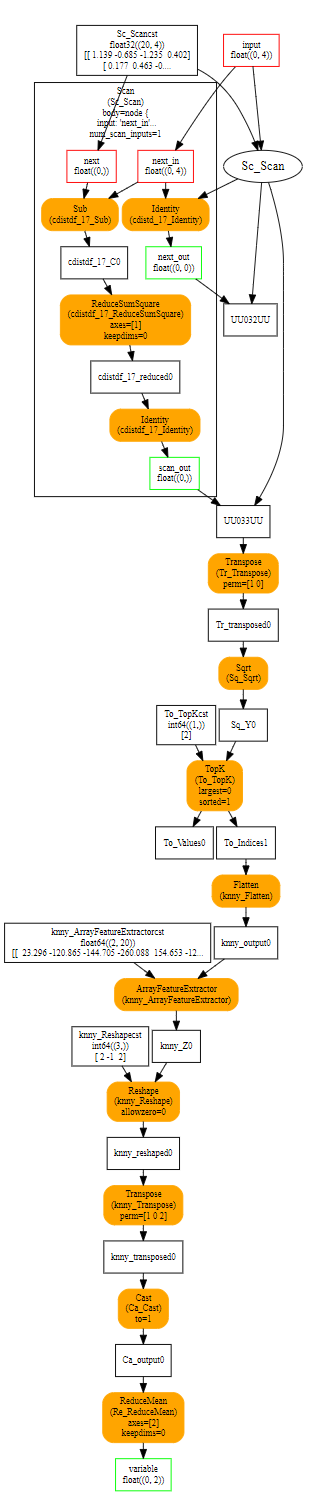

透過以下影像,可以更容易地視覺化整體結構。

else 和 then 分支都很簡單。節點 *If* 甚至可以用節點 *Where* 取代,這樣會更快。當兩個分支都較大,且跳過其中一個分支更有效時,它會變得很有趣。

Scan¶

閱讀規範時,Scan 看起來相當複雜。它可用於迴圈處理張量的一個維度,並將結果儲存在預先配置的張量中。

以下範例實作了迴歸問題的經典最近鄰居。第一步是計算輸入特徵 *X* 和訓練集 *W* 之間的成對距離:\(dist(X,W) = (M_{ij}) = (\norm{X_i - W_j}^2)_{ij}\)。接著是運算子 TopK,它會提取 *k* 個最近鄰居。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor, make_graph,

make_tensor_value_info)

from onnx.checker import check_model

# subgraph

initializers = []

nodes = []

inputs = []

outputs = []

value = make_tensor_value_info('next_in', 1, [None, 4])

inputs.append(value)

value = make_tensor_value_info('next', 1, [None])

inputs.append(value)

value = make_tensor_value_info('next_out', 1, [None, None])

outputs.append(value)

value = make_tensor_value_info('scan_out', 1, [None])

outputs.append(value)

node = make_node(

'Identity', ['next_in'], ['next_out'],

name='cdistd_17_Identity', domain='')

nodes.append(node)

node = make_node(

'Sub', ['next_in', 'next'], ['cdistdf_17_C0'],

name='cdistdf_17_Sub', domain='')

nodes.append(node)

node = make_node(

'ReduceSumSquare', ['cdistdf_17_C0'], ['cdistdf_17_reduced0'],

name='cdistdf_17_ReduceSumSquare', axes=[1], keepdims=0, domain='')

nodes.append(node)

node = make_node(

'Identity', ['cdistdf_17_reduced0'],

['scan_out'], name='cdistdf_17_Identity', domain='')

nodes.append(node)

graph = make_graph(nodes, 'OnnxIdentity',

inputs, outputs, initializers)

# main graph

initializers = []

nodes = []

inputs = []

outputs = []

opsets = {'': 15, 'ai.onnx.ml': 15}

target_opset = 15 # subgraphs

# initializers

list_value = [23.29599822460675, -120.86516699239603, -144.70495899914215, -260.08772982740413,

154.65272105889147, -122.23295157108991, 247.45232560871727, -182.83789715805776,

-132.92727431421793, 147.48710175784703, 88.27761768038069, -14.87785569894749,

111.71487894705504, 301.0518319089629, -29.64235742280055, -113.78493504731911,

-204.41218591022718, 112.26561056133608, 66.04032954135549,

-229.5428380626701, -33.549262642481615, -140.95737409864623, -87.8145187836131,

-90.61397011283958, 57.185488100413366, 56.864151796743855, 77.09054590340892,

-187.72501631246712, -42.779503579806025, -21.642642730674076, -44.58517761667535,

78.56025104939847, -23.92423223842056, 234.9166231927213, -73.73512816431007,

-10.150864499514297, -70.37105466673813, 65.5755688281476, 108.68676290979731, -78.36748960443065]

value = numpy.array(list_value, dtype=numpy.float64).reshape((2, 20))

tensor = numpy_helper.from_array(

value, name='knny_ArrayFeatureExtractorcst')

initializers.append(tensor)

list_value = [1.1394007205963135, -0.6848101019859314, -1.234825849533081, 0.4023416340351105,

0.17742614448070526, 0.46278226375579834, -0.4017809331417084, -1.630198359489441,

-0.5096521973609924, 0.7774903774261475, -0.4380742907524109, -1.2527953386306763,

-1.0485529899597168, 1.950775384902954, -1.420017957687378, -1.7062702178955078,

1.8675580024719238, -0.15135720372200012, -0.9772778749465942, 0.9500884413719177,

-2.5529897212982178, -0.7421650290489197, 0.653618574142456, 0.8644362092018127,

1.5327792167663574, 0.37816253304481506, 1.4693588018417358, 0.154947429895401,

-0.6724604368209839, -1.7262825965881348, -0.35955315828323364, -0.8131462931632996,

-0.8707971572875977, 0.056165341287851334, -0.5788496732711792, -0.3115525245666504,

1.2302906513214111, -0.302302747964859, 1.202379822731018, -0.38732680678367615,

2.269754648208618, -0.18718385696411133, -1.4543657302856445, 0.04575851559638977,

-0.9072983860969543, 0.12898291647434235, 0.05194539576768875, 0.7290905714035034,

1.4940791130065918, -0.8540957570075989, -0.2051582634449005, 0.3130677044391632,

1.764052391052246, 2.2408931255340576, 0.40015721321105957, 0.978738009929657,

0.06651721894741058, -0.3627411723136902, 0.30247190594673157, -0.6343221068382263,

-0.5108051300048828, 0.4283318817615509, -1.18063223361969, -0.02818222902715206,

-1.6138978004455566, 0.38690251111984253, -0.21274028718471527, -0.8954665660858154,

0.7610377073287964, 0.3336743414402008, 0.12167501449584961, 0.44386324286460876,

-0.10321885347366333, 1.4542734622955322, 0.4105985164642334, 0.14404356479644775,

-0.8877857327461243, 0.15634897351264954, -1.980796456336975, -0.34791216254234314]

value = numpy.array(list_value, dtype=numpy.float32).reshape((20, 4))

tensor = numpy_helper.from_array(value, name='Sc_Scancst')

initializers.append(tensor)

value = numpy.array([2], dtype=numpy.int64)

tensor = numpy_helper.from_array(value, name='To_TopKcst')

initializers.append(tensor)

value = numpy.array([2, -1, 2], dtype=numpy.int64)

tensor = numpy_helper.from_array(value, name='knny_Reshapecst')

initializers.append(tensor)

# inputs

value = make_tensor_value_info('input', 1, [None, 4])

inputs.append(value)

# outputs

value = make_tensor_value_info('variable', 1, [None, 2])

outputs.append(value)

# nodes

node = make_node(

'Scan', ['input', 'Sc_Scancst'], ['UU032UU', 'UU033UU'],

name='Sc_Scan', body=graph, num_scan_inputs=1, domain='')

nodes.append(node)

node = make_node(

'Transpose', ['UU033UU'], ['Tr_transposed0'],

name='Tr_Transpose', perm=[1, 0], domain='')

nodes.append(node)

node = make_node(

'Sqrt', ['Tr_transposed0'], ['Sq_Y0'],

name='Sq_Sqrt', domain='')

nodes.append(node)

node = make_node(

'TopK', ['Sq_Y0', 'To_TopKcst'], ['To_Values0', 'To_Indices1'],

name='To_TopK', largest=0, sorted=1, domain='')

nodes.append(node)

node = make_node(

'Flatten', ['To_Indices1'], ['knny_output0'],

name='knny_Flatten', domain='')

nodes.append(node)

node = make_node(

'ArrayFeatureExtractor',

['knny_ArrayFeatureExtractorcst', 'knny_output0'], ['knny_Z0'],

name='knny_ArrayFeatureExtractor', domain='ai.onnx.ml')

nodes.append(node)

node = make_node(

'Reshape', ['knny_Z0', 'knny_Reshapecst'], ['knny_reshaped0'],

name='knny_Reshape', allowzero=0, domain='')

nodes.append(node)

node = make_node(

'Transpose', ['knny_reshaped0'], ['knny_transposed0'],

name='knny_Transpose', perm=[1, 0, 2], domain='')

nodes.append(node)

node = make_node(

'Cast', ['knny_transposed0'], ['Ca_output0'],

name='Ca_Cast', to=TensorProto.FLOAT, domain='')

nodes.append(node)

node = make_node(

'ReduceMean', ['Ca_output0'], ['variable'],

name='Re_ReduceMean', axes=[2], keepdims=0, domain='')

nodes.append(node)

# graph

graph = make_graph(nodes, 'KNN regressor', inputs, outputs, initializers)

# model

onnx_model = make_model(graph)

onnx_model.ir_version = 8

onnx_model.producer_name = 'skl2onnx'

onnx_model.producer_version = ''

onnx_model.domain = 'ai.onnx'

onnx_model.model_version = 0

onnx_model.doc_string = ''

set_model_props(onnx_model, {})

# opsets

del onnx_model.opset_import[:]

for dom, value in opsets.items():

op_set = onnx_model.opset_import.add()

op_set.domain = dom

op_set.version = value

check_model(onnx_model)

with open("knnr.onnx", "wb") as f:

f.write(onnx_model.SerializeToString())

print(onnx_model)

ir_version: 8

producer_name: "skl2onnx"

producer_version: ""

domain: "ai.onnx"

model_version: 0

doc_string: ""

graph {

node {

input: "input"

input: "Sc_Scancst"

output: "UU032UU"

output: "UU033UU"

name: "Sc_Scan"

op_type: "Scan"

attribute {

name: "body"

g {

node {

input: "next_in"

output: "next_out"

name: "cdistd_17_Identity"

op_type: "Identity"

domain: ""

}

node {

input: "next_in"

input: "next"

output: "cdistdf_17_C0"

name: "cdistdf_17_Sub"

op_type: "Sub"

domain: ""

}

node {

input: "cdistdf_17_C0"

output: "cdistdf_17_reduced0"

name: "cdistdf_17_ReduceSumSquare"

op_type: "ReduceSumSquare"

attribute {

name: "axes"

ints: 1

type: INTS

}

attribute {

name: "keepdims"

i: 0

type: INT

}

domain: ""

}

node {

input: "cdistdf_17_reduced0"

output: "scan_out"

name: "cdistdf_17_Identity"

op_type: "Identity"

domain: ""

}

name: "OnnxIdentity"

input {

name: "next_in"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

dim_value: 4

}

}

}

}

}

input {

name: "next"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

output {

name: "next_out"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "scan_out"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

type: GRAPH

}

attribute {

name: "num_scan_inputs"

i: 1

type: INT

}

domain: ""

}

node {

input: "UU033UU"

output: "Tr_transposed0"

name: "Tr_Transpose"

op_type: "Transpose"

attribute {

name: "perm"

ints: 1

ints: 0

type: INTS

}

domain: ""

}

node {

input: "Tr_transposed0"

output: "Sq_Y0"

name: "Sq_Sqrt"

op_type: "Sqrt"

domain: ""

}

node {

input: "Sq_Y0"

input: "To_TopKcst"

output: "To_Values0"

output: "To_Indices1"

name: "To_TopK"

op_type: "TopK"

attribute {

name: "largest"

i: 0

type: INT

}

attribute {

name: "sorted"

i: 1

type: INT

}

domain: ""

}

node {

input: "To_Indices1"

output: "knny_output0"

name: "knny_Flatten"

op_type: "Flatten"

domain: ""

}

node {

input: "knny_ArrayFeatureExtractorcst"

input: "knny_output0"

output: "knny_Z0"

name: "knny_ArrayFeatureExtractor"

op_type: "ArrayFeatureExtractor"

domain: "ai.onnx.ml"

}

node {

input: "knny_Z0"

input: "knny_Reshapecst"

output: "knny_reshaped0"

name: "knny_Reshape"

op_type: "Reshape"

attribute {

name: "allowzero"

i: 0

type: INT

}

domain: ""

}

node {

input: "knny_reshaped0"

output: "knny_transposed0"

name: "knny_Transpose"

op_type: "Transpose"

attribute {

name: "perm"

ints: 1

ints: 0

ints: 2

type: INTS

}

domain: ""

}

node {

input: "knny_transposed0"

output: "Ca_output0"

name: "Ca_Cast"

op_type: "Cast"

attribute {

name: "to"

i: 1

type: INT

}

domain: ""

}

node {

input: "Ca_output0"

output: "variable"

name: "Re_ReduceMean"

op_type: "ReduceMean"

attribute {

name: "axes"

ints: 2

type: INTS

}

attribute {

name: "keepdims"

i: 0

type: INT

}

domain: ""

}

name: "KNN regressor"

initializer {

dims: 2

dims: 20

data_type: 11

name: "knny_ArrayFeatureExtractorcst"

raw_data: ",\&\212\306K7@\333z`\345^7^\300\304\312,\006\217\026b\300Z9dWgAp\300.+F\027\343Tc@\203\330\264\255\350\216^\300\260\022\216sy\356n@\237h\263\r\320\332f\300\224\277.;\254\235`\300\336\370lV\226ob@\261\201\362|\304\021V@c,[Mv\301-\300\322\214\240\223\300\355[@)\036\262M\324\320r@nE;\211q\244=\300\021n5`<r\\300\207\211\201\2400\215i\300H\232p\303\377\020\@\317K[\302\224\202P@&\306\355\355^\261l\300\301/\377<N\306@\300#w\001\317\242\236a\300$fd\023!\364U\300\204\327LIK\247V\300J\211\366\022\276\227L@\262\345\254\206\234nL@f{\013\201\313ES@\234\343hU3wg\300\3370\367\305\306cE\300\336A\347;\204\2445\300f\374\242\031\347JF\300\325\2557'\333\243S@\331\354\345{\232\3547\300\307o)\372T]m@#\005\000W\014oR\300'\025\227\034>M$\300\310\252\022\\277\227Q\300l_\243\036\326dP@\333kk\354\363+[@\223)\036\363\204\227S\300"

}

initializer {

dims: 20

dims: 4

data_type: 1

name: "Sc_Scancst"

raw_data: "\342\327\221?\267O/\277\306\016\236\277\271\377\315>3\2575>\314\361\354>;\266\315\276W\252\320\277\221x\002\277\234\tG?FK\340\276\231[\240\277\3746\206\277\002\263\371?&\303\265\277\020g\332\277$\014\357?b\375\032\276\342.z\277\3778s?/d#\300\207\376=\277\214S'?\261K]?\0342\304?\205\236\301>\363\023\274?\212\252\036>^&,\277\324\366\334\277Z\027\270\276[*P\277\220\354^\277\241\rf=~/\024\277\320\203\237\276*z\235?m\307\232\276\225\347\231?\263O\306\276\251C\021@ \255?\276\250(\272\277Hm;=\265Dh\277\031\024\004>\262\304T=\256\245:?\374=\277?\005\246Z\277\002\025R\276iJ\240>x\314\341?\313j\017@h\341\314>\223\216z?.:\210=6\271\271\276\231\335\232>\357b"\277 \304\002\277QN\333>\365\036\227\277k\336\346\2744\224\316\277\026\030\306>\227\330Y\276L=e\277^\323B?]\327\252>\3000\371=\013B\343>hd\323\275\242%\272?\3709\322>(\200\023>\355Ec\277\362\031 >\275\212\375\277\213!\262\276"

}

initializer {

dims: 1

data_type: 7

name: "To_TopKcst"

raw_data: "\002\000\000\000\000\000\000\000"

}

initializer {

dims: 3

data_type: 7

name: "knny_Reshapecst"

raw_data: "\002\000\000\000\000\000\000\000\377\377\377\377\377\377\377\377\002\000\000\000\000\000\000\000"

}

input {

name: "input"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

dim_value: 4

}

}

}

}

}

output {

name: "variable"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

dim_value: 2

}

}

}

}

}

}

opset_import {

domain: ""

version: 15

}

opset_import {

domain: "ai.onnx.ml"

version: 15

}

視覺上看起來如下

子圖形由運算子 Scan 執行。在這種情況下,有一個 *scan* 輸入,表示運算子只會建立一個輸出。

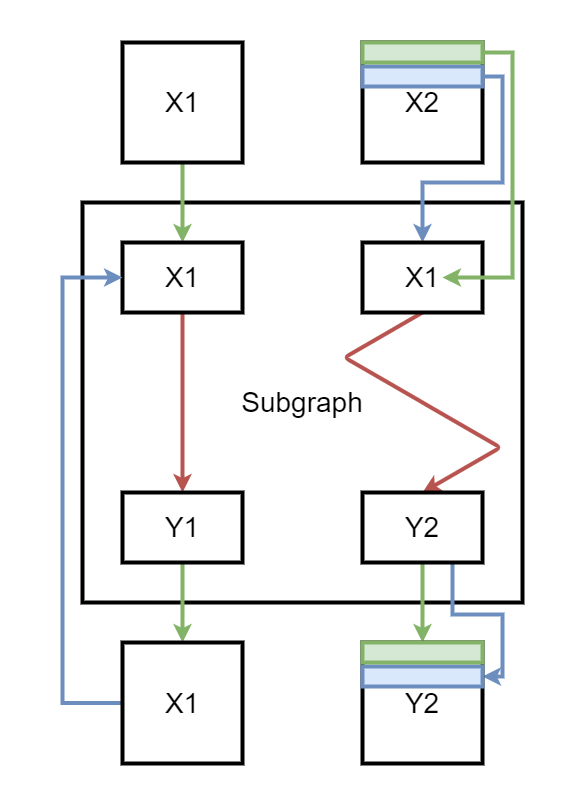

node = make_node(

'Scan', ['X1', 'X2'], ['Y1', 'Y2'],

name='Sc_Scan', body=graph, num_scan_inputs=1, domain='')

在第一次迭代中,子圖形會取得 *X1* 和 *X2* 的第一列。圖形會產生兩個輸出。第一個會在下一次迭代中取代 *X1*,第二個會儲存在容器中以形成 *Y2*。在第二次迭代中,子圖形的第二個輸入是 *X2* 的第二列。以下是簡短摘要。綠色是第一次迭代,藍色是第二次迭代。

函式¶

如上一章所述,函式可用於縮短建立模型的程式碼,並為執行預測的執行時間提供更多可能性,如果存在此函式的特定實作,速度會更快。如果沒有這種情況,執行時間仍然可以使用基於現有運算子的預設實作。

函式 make_function 用於定義函式。它的運作方式類似於具有較少類型的圖形。它更像是一個範本。此 API 可能會演變。它也不包含初始化器。

沒有屬性的函式¶

這是比較簡單的情況。函式的每個輸入都是在執行時間才得知的動態物件。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info, make_opsetid,

make_function)

from onnx.checker import check_model

new_domain = 'custom'

opset_imports = [make_opsetid("", 14), make_opsetid(new_domain, 1)]

# Let's define a function for a linear regression

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

linear_regression = make_function(

new_domain, # domain name

'LinearRegression', # function name

['X', 'A', 'B'], # input names

['Y'], # output names

[node1, node2], # nodes

opset_imports, # opsets

[]) # attribute names

# Let's use it in a graph.

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

graph = make_graph(

[make_node('LinearRegression', ['X', 'A', 'B'], ['Y1'], domain=new_domain),

make_node('Abs', ['Y1'], ['Y'])],

'example',

[X, A, B], [Y])

onnx_model = make_model(

graph, opset_imports=opset_imports,

functions=[linear_regression]) # functions to add)

check_model(onnx_model)

# the work is done, let's display it...

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

input: "B"

output: "Y1"

op_type: "LinearRegression"

domain: "custom"

}

node {

input: "Y1"

output: "Y"

op_type: "Abs"

}

name: "example"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

domain: ""

version: 14

}

opset_import {

domain: "custom"

version: 1

}

functions {

name: "LinearRegression"

input: "X"

input: "A"

input: "B"

output: "Y"

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

opset_import {

domain: ""

version: 14

}

opset_import {

domain: "custom"

version: 1

}

domain: "custom"

}

具有屬性的函式¶

下列函式與先前的函式等效,但其中一個輸入 *B* 已轉換為名為 *bias* 的引數。程式碼幾乎相同,只是偏差現在是常數。在函式定義中,會建立一個節點 *Constant* 以插入引數作為結果。它會透過屬性 ref_attr_name 連結到引數。

import numpy

from onnx import numpy_helper, TensorProto, AttributeProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info, make_opsetid,

make_function)

from onnx.checker import check_model

new_domain = 'custom'

opset_imports = [make_opsetid("", 14), make_opsetid(new_domain, 1)]

# Let's define a function for a linear regression

# The first step consists in creating a constant

# equal to the input parameter of the function.

cst = make_node('Constant', [], ['B'])

att = AttributeProto()

att.name = "value"

# This line indicates the value comes from the argument

# named 'bias' the function is given.

att.ref_attr_name = "bias"

att.type = AttributeProto.TENSOR

cst.attribute.append(att)

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

linear_regression = make_function(

new_domain, # domain name

'LinearRegression', # function name

['X', 'A'], # input names

['Y'], # output names

[cst, node1, node2], # nodes

opset_imports, # opsets

["bias"]) # attribute names

# Let's use it in a graph.

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

graph = make_graph(

[make_node('LinearRegression', ['X', 'A'], ['Y1'], domain=new_domain,

# bias is now an argument of the function and is defined as a tensor

bias=make_tensor('former_B', TensorProto.FLOAT, [1], [0.67])),

make_node('Abs', ['Y1'], ['Y'])],

'example',

[X, A], [Y])

onnx_model = make_model(

graph, opset_imports=opset_imports,

functions=[linear_regression]) # functions to add)

check_model(onnx_model)

# the work is done, let's display it...

print(onnx_model)

ir_version: 11

graph {

node {

input: "X"

input: "A"

output: "Y1"

op_type: "LinearRegression"

attribute {

name: "bias"

t {

dims: 1

data_type: 1

float_data: 0.67

name: "former_B"

}

type: TENSOR

}

domain: "custom"

}

node {

input: "Y1"

output: "Y"

op_type: "Abs"

}

name: "example"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

dim {

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

}

}

}

}

}

}

opset_import {

domain: ""

version: 14

}

opset_import {

domain: "custom"

version: 1

}

functions {

name: "LinearRegression"

input: "X"

input: "A"

output: "Y"

attribute: "bias"

node {

output: "B"

op_type: "Constant"

attribute {

name: "value"

type: TENSOR

ref_attr_name: "bias"

}

}

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

}

opset_import {

domain: ""

version: 14

}

opset_import {

domain: "custom"

version: 1

}

domain: "custom"

}

剖析¶

模組 ONNX 提供了一種更快定義圖形的方式,而且更容易閱讀。當圖形在單一函式中建構時,很容易使用,但當圖形從許多不同的函式建構時,這些函式會轉換機器學習管線的每個部分,就會比較不容易使用。

import onnx.parser

from onnx.checker import check_model

input = '''

<

ir_version: 8,

opset_import: [ "" : 15]

>

agraph (float[I,J] X, float[I] A, float[I] B) => (float[I] Y) {

XA = MatMul(X, A)

Y = Add(XA, B)

}

'''

onnx_model = onnx.parser.parse_model(input)

check_model(onnx_model)

print(onnx_model)

ir_version: 8

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

domain: ""

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

domain: ""

}

name: "agraph"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

dim {

dim_param: "J"

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

}

}

}

}

}

opset_import {

domain: ""

version: 15

}

這種方式用於建立小型模型,但在轉換程式庫中很少使用。

檢查器和形狀推斷¶

ONNX 提供一個函式來檢查模型是否有效。它會在可以偵測到不一致時檢查輸入類型或形狀。下列範例新增了兩個不同類型的矩陣,這是不允許的。

import onnx.parser

import onnx.checker

input = '''

<

ir_version: 8,

opset_import: [ "" : 15]

>

agraph (float[I,4] X, float[4,2] A, int[4] B) => (float[I] Y) {

XA = MatMul(X, A)

Y = Add(XA, B)

}

'''

try:

onnx_model = onnx.parser.parse_model(input)

onnx.checker.check_model(onnx_model)

except Exception as e:

print(e)

b'[ParseError at position (line: 6 column: 44)]\nError context: agraph (float[I,4] X, float[4,2] A, int[4] B) => (float[I] Y) {\nExpected character ) not found.'

由於不一致,check_model 引發錯誤。這適用於主要網域或 ML 網域中定義的所有運算子。對於任何未在任何規格中定義的自訂運算子,它會保持靜默。

形狀推斷具有一個目的:估計中間結果的形狀和類型。如果已知,執行時間可以預先估計記憶體消耗並最佳化計算。它可以融合某些運算子,它可以就地執行計算...

import onnx.parser

from onnx import helper, shape_inference

input = '''

<

ir_version: 8,

opset_import: [ "" : 15]

>

agraph (float[I,4] X, float[4,2] A, float[4] B) => (float[I] Y) {

XA = MatMul(X, A)

Y = Add(XA, B)

}

'''

onnx_model = onnx.parser.parse_model(input)

inferred_model = shape_inference.infer_shapes(onnx_model)

print(inferred_model)

ir_version: 8

graph {

node {

input: "X"

input: "A"

output: "XA"

op_type: "MatMul"

domain: ""

}

node {

input: "XA"

input: "B"

output: "Y"

op_type: "Add"

domain: ""

}

name: "agraph"

input {

name: "X"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

dim {

dim_value: 4

}

}

}

}

}

input {

name: "A"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_value: 4

}

dim {

dim_value: 2

}

}

}

}

}

input {

name: "B"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_value: 4

}

}

}

}

}

output {

name: "Y"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

}

}

}

}

value_info {

name: "XA"

type {

tensor_type {

elem_type: 1

shape {

dim {

dim_param: "I"

}

dim {

dim_value: 2

}

}

}

}

}

}

opset_import {

domain: ""

version: 15

}

有一個新的屬性 value_info,它儲存推斷的形狀。 dim_param: "I" 中的字母 I 可以視為變數。它取決於輸入,但函式能夠判斷哪個中間結果會共用相同的維度。形狀推斷並非所有情況都有效。例如,Reshape 運算子。只有在形狀恆定時,形狀推斷才有效。如果不是恆定,除非後續節點需要特定形狀,否則無法輕易推斷形狀。

評估和執行時間¶

ONNX 標準允許框架以 ONNX 格式匯出訓練的模型,並使用任何支援 ONNX 格式的後端啟用推斷。*onnxruntime* 是一個有效的選項。它可在許多平台上使用。它針對快速推斷進行了最佳化。其涵蓋範圍可在 ONNX 後端儀表板 上追蹤。*onnx* 實作了一個 Python 執行時間,有助於了解模型。它並非旨在用於生產環境,效能也不是目標。

線性迴歸的評估¶

完整的 API 在 onnx.reference 中說明。它會取得模型(*ModelProto*、檔案名稱,…)。方法 run 會傳回字典中指定的一組給定輸入的輸出。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info)

from onnx.checker import check_model

from onnx.reference import ReferenceEvaluator

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

sess = ReferenceEvaluator(onnx_model)

x = numpy.random.randn(4, 2).astype(numpy.float32)

a = numpy.random.randn(2, 1).astype(numpy.float32)

b = numpy.random.randn(1, 1).astype(numpy.float32)

feeds = {'X': x, 'A': a, 'B': b}

print(sess.run(None, feeds))

[array([[-0.9294482 ],

[ 2.5211418 ],

[ 0.86493903],

[ 1.2495515 ]], dtype=float32)]

節點的評估¶

評估器也可以評估簡單的節點,以檢查運算子在特定輸入上的行為方式。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import make_node

from onnx.reference import ReferenceEvaluator

node = make_node('EyeLike', ['X'], ['Y'])

sess = ReferenceEvaluator(node)

x = numpy.random.randn(4, 2).astype(numpy.float32)

feeds = {'X': x}

print(sess.run(None, feeds))

[array([[1., 0.],

[0., 1.],

[0., 0.],

[0., 0.]], dtype=float32)]

類似的程式碼也適用於 *GraphProto* 或 *FunctionProto*。

逐步評估¶

轉換程式庫會取得使用機器學習框架(*pytorch*、*scikit-learn*,…)訓練的現有模型,並將模型轉換為 ONNX 圖形。複雜的模型通常不會在第一次嘗試時就成功,而查看中間結果可能會有助於找出不正確轉換的部分。參數 verbose 會顯示有關中間結果的資訊。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info)

from onnx.checker import check_model

from onnx.reference import ReferenceEvaluator

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'B'], ['Y'])

graph = make_graph([node1, node2], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

for verbose in [1, 2, 3, 4]:

print()

print(f"------ verbose={verbose}")

print()

sess = ReferenceEvaluator(onnx_model, verbose=verbose)

x = numpy.random.randn(4, 2).astype(numpy.float32)

a = numpy.random.randn(2, 1).astype(numpy.float32)

b = numpy.random.randn(1, 1).astype(numpy.float32)

feeds = {'X': x, 'A': a, 'B': b}

print(sess.run(None, feeds))

------ verbose=1

[array([[ 0.73232394],

[-1.4866254 ],

[-1.0419984 ],

[-4.0337768 ]], dtype=float32)]

------ verbose=2

MatMul(X, A) -> XA

Add(XA, B) -> Y

[array([[-0.18954635],

[-0.45515418],

[ 1.9075701 ],

[ 0.9409449 ]], dtype=float32)]

------ verbose=3

+I X: float32:(4, 2) in [-2.260816812515259, 1.8377931118011475]

+I A: float32:(2, 1) in [-0.5139287710189819, 1.151671051979065]

+I B: float32:(1, 1) in [0.9994445443153381, 0.9994445443153381]

MatMul(X, A) -> XA

+ XA: float32:(4, 1) in [-2.9971811771392822, 2.6820805072784424]

Add(XA, B) -> Y

+ Y: float32:(4, 1) in [-1.9977366924285889, 3.6815249919891357]

[array([[-1.9977367 ],

[ 3.681525 ],

[ 0.30459452],

[ 2.5263572 ]], dtype=float32)]

------ verbose=4

+I X: float32:(4, 2):-0.55951988697052,0.21161867678165436,0.9477407336235046,-0.14677627384662628,-0.11711878329515457...

+I A: float32:(2, 1):[0.05029646307229996, -0.01414363645017147]

+I B: float32:(1, 1):[-0.6346255540847778]

MatMul(X, A) -> XA

+ XA: float32:(4, 1):[-0.03113492950797081, 0.049743957817554474, -0.015281014144420624, 0.041746459901332855]

Add(XA, B) -> Y

+ Y: float32:(4, 1):[-0.6657604575157166, -0.584881603717804, -0.649906575679779, -0.5928791165351868]

[array([[-0.66576046],

[-0.5848816 ],

[-0.6499066 ],

[-0.5928791 ]], dtype=float32)]

評估自訂節點¶

下列範例仍然實作線性迴歸,但會將單位矩陣新增至 *A*:\(Y = X(A + I) + B\)。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info)

from onnx.checker import check_model

from onnx.reference import ReferenceEvaluator

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node0 = make_node('EyeLike', ['A'], ['Eye'])

node1 = make_node('Add', ['A', 'Eye'], ['A1'])

node2 = make_node('MatMul', ['X', 'A1'], ['XA1'])

node3 = make_node('Add', ['XA1', 'B'], ['Y'])

graph = make_graph([node0, node1, node2, node3], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph)

check_model(onnx_model)

with open("linear_regression.onnx", "wb") as f:

f.write(onnx_model.SerializeToString())

sess = ReferenceEvaluator(onnx_model, verbose=2)

x = numpy.random.randn(4, 2).astype(numpy.float32)

a = numpy.random.randn(2, 2).astype(numpy.float32) / 10

b = numpy.random.randn(1, 2).astype(numpy.float32)

feeds = {'X': x, 'A': a, 'B': b}

print(sess.run(None, feeds))

EyeLike(A) -> Eye

Add(A, Eye) -> A1

MatMul(X, A1) -> XA1

Add(XA1, B) -> Y

[array([[-1.5785826 , 0.04915774],

[-0.70039225, -0.11179054],

[-0.3988734 , -0.6035299 ],

[-0.11086661, -0.8977231 ]], dtype=float32)]

如果我們將運算子 *EyeLike* 和 *Add* 合併到 *AddEyeLike* 中使其更有效率,該怎麼辦?下一個範例會以網域 'optimized' 中的單一運算子取代這兩個運算子。

import numpy

from onnx import numpy_helper, TensorProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info, make_opsetid)

from onnx.checker import check_model

X = make_tensor_value_info('X', TensorProto.FLOAT, [None, None])

A = make_tensor_value_info('A', TensorProto.FLOAT, [None, None])

B = make_tensor_value_info('B', TensorProto.FLOAT, [None, None])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, [None])

node01 = make_node('AddEyeLike', ['A'], ['A1'], domain='optimized')

node2 = make_node('MatMul', ['X', 'A1'], ['XA1'])

node3 = make_node('Add', ['XA1', 'B'], ['Y'])

graph = make_graph([node01, node2, node3], 'lr', [X, A, B], [Y])

onnx_model = make_model(graph, opset_imports=[

make_opsetid('', 18), make_opsetid('optimized', 1)

])

check_model(onnx_model)

with open("linear_regression_improved.onnx", "wb") as f:

f.write(onnx_model.SerializeToString())

我們需要評估此模型是否等效於第一個模型。這需要此特定節點的實作。

import numpy

from onnx.reference import ReferenceEvaluator

from onnx.reference.op_run import OpRun

class AddEyeLike(OpRun):

op_domain = "optimized"

def _run(self, X, alpha=1.):

assert len(X.shape) == 2

assert X.shape[0] == X.shape[1]

X = X.copy()

ind = numpy.diag_indices(X.shape[0])

X[ind] += alpha

return (X,)

sess = ReferenceEvaluator("linear_regression_improved.onnx", verbose=2, new_ops=[AddEyeLike])

x = numpy.random.randn(4, 2).astype(numpy.float32)

a = numpy.random.randn(2, 2).astype(numpy.float32) / 10

b = numpy.random.randn(1, 2).astype(numpy.float32)

feeds = {'X': x, 'A': a, 'B': b}

print(sess.run(None, feeds))

# Let's check with the previous model.

sess0 = ReferenceEvaluator("linear_regression.onnx",)

sess1 = ReferenceEvaluator("linear_regression_improved.onnx", new_ops=[AddEyeLike])

y0 = sess0.run(None, feeds)[0]

y1 = sess1.run(None, feeds)[0]

print(y0)

print(y1)

print(f"difference: {numpy.abs(y0 - y1).max()}")

AddEyeLike(A) -> A1

MatMul(X, A1) -> XA1

Add(XA1, B) -> Y

[array([[ 0.410545 , 2.5168788],

[-0.5426773, 1.7367471],

[-1.2819328, 1.4263229],

[ 0.7035538, 1.5225948]], dtype=float32)]

[[ 0.410545 2.5168788]

[-0.5426773 1.7367471]

[-1.2819328 1.4263229]

[ 0.7035538 1.5225948]]

[[ 0.410545 2.5168788]

[-0.5426773 1.7367471]

[-1.2819328 1.4263229]

[ 0.7035538 1.5225948]]

difference: 0.0

預測相同。讓我們比較在夠大的矩陣上的效能,以觀察顯著差異。

import timeit

import numpy

from onnx.reference import ReferenceEvaluator

from onnx.reference.op_run import OpRun

class AddEyeLike(OpRun):

op_domain = "optimized"

def _run(self, X, alpha=1.):

assert len(X.shape) == 2

assert X.shape[0] == X.shape[1]

X = X.copy()

ind = numpy.diag_indices(X.shape[0])

X[ind] += alpha

return (X,)

sess = ReferenceEvaluator("linear_regression_improved.onnx", verbose=2, new_ops=[AddEyeLike])

x = numpy.random.randn(4, 100).astype(numpy.float32)

a = numpy.random.randn(100, 100).astype(numpy.float32) / 10

b = numpy.random.randn(1, 100).astype(numpy.float32)

feeds = {'X': x, 'A': a, 'B': b}

sess0 = ReferenceEvaluator("linear_regression.onnx")

sess1 = ReferenceEvaluator("linear_regression_improved.onnx", new_ops=[AddEyeLike])

y0 = sess0.run(None, feeds)[0]

y1 = sess1.run(None, feeds)[0]

print(f"difference: {numpy.abs(y0 - y1).max()}")

print(f"time with EyeLike+Add: {timeit.timeit(lambda: sess0.run(None, feeds), number=1000)}")

print(f"time with AddEyeLike: {timeit.timeit(lambda: sess1.run(None, feeds), number=1000)}")

difference: 0.0

time with EyeLike+Add: 0.09148920300003738

time with AddEyeLike: 0.07531165000000328

在這種情況下,似乎值得加入最佳化的節點。這種最佳化通常稱為 *融合*。兩個連續的運算子會融合為兩者的最佳化版本。生產通常依賴 *onnxruntime*,但由於最佳化使用基本的矩陣運算,因此它應該會在任何其他執行時間上帶來相同的效能提升。

實作詳細資訊¶

Python 和 C++¶

onnx 依賴 protobuf 來定義其類型。您可能會認為 Python 物件只是內部結構 C 指標的包裝。因此,應該可以從接收 ModelProto 類型 Python 物件的函式存取內部資料。但事實並非如此。根據 Protobuf 4 的變更,在版本 4 之後,這種做法已不再可行,更安全的做法是假設取得內容的唯一方法是將模型序列化為位元組,將其傳遞給 C 函式,然後將其反序列化。像是 check_model 或 shape_inference 等函式都會先呼叫 SerializeToString,然後呼叫 ParseFromString,再使用 C 程式碼檢查模型。

屬性與輸入¶

兩者之間有明顯的區別。輸入是動態的,每次執行時都可能改變。屬性則永遠不會改變,最佳化工具可以假設其永遠不變的情況下改進執行圖。因此,不可能將輸入轉換為屬性。而運算子 *Constant* 是唯一可以將屬性變為輸入的運算子。

具有形狀或沒有形狀¶

onnx 通常期望每個輸入或輸出都有形狀,並假設維度(或維度數量)是已知的。如果我們需要為每個維度建立有效的圖形,該怎麼辦?這種情況仍然令人費解。

import numpy

from onnx import numpy_helper, TensorProto, FunctionProto

from onnx.helper import (

make_model, make_node, set_model_props, make_tensor,

make_graph, make_tensor_value_info, make_opsetid,

make_function)

from onnx.checker import check_model

from onnxruntime import InferenceSession

def create_model(shapes):

new_domain = 'custom'

opset_imports = [make_opsetid("", 14), make_opsetid(new_domain, 1)]

node1 = make_node('MatMul', ['X', 'A'], ['XA'])

node2 = make_node('Add', ['XA', 'A'], ['Y'])

X = make_tensor_value_info('X', TensorProto.FLOAT, shapes['X'])

A = make_tensor_value_info('A', TensorProto.FLOAT, shapes['A'])

Y = make_tensor_value_info('Y', TensorProto.FLOAT, shapes['Y'])

graph = make_graph([node1, node2], 'example', [X, A], [Y])

onnx_model = make_model(graph, opset_imports=opset_imports)

# Let models runnable by onnxruntime with a released ir_version

onnx_model.ir_version = 8

return onnx_model

print("----------- case 1: 2D x 2D -> 2D")

onnx_model = create_model({'X': [None, None], 'A': [None, None], 'Y': [None, None]})

check_model(onnx_model)

sess = InferenceSession(onnx_model.SerializeToString(),

providers=["CPUExecutionProvider"])

res = sess.run(None, {

'X': numpy.random.randn(2, 2).astype(numpy.float32),

'A': numpy.random.randn(2, 2).astype(numpy.float32)})

print(res)

print("----------- case 2: 2D x 1D -> 1D")

onnx_model = create_model({'X': [None, None], 'A': [None], 'Y': [None]})

check_model(onnx_model)

sess = InferenceSession(onnx_model.SerializeToString(),

providers=["CPUExecutionProvider"])

res = sess.run(None, {

'X': numpy.random.randn(2, 2).astype(numpy.float32),

'A': numpy.random.randn(2).astype(numpy.float32)})

print(res)

print("----------- case 3: 2D x 0D -> 0D")

onnx_model = create_model({'X': [None, None], 'A': [], 'Y': []})

check_model(onnx_model)

try:

InferenceSession(onnx_model.SerializeToString(),

providers=["CPUExecutionProvider"])

except Exception as e:

print(e)

print("----------- case 4: 2D x None -> None")

onnx_model = create_model({'X': [None, None], 'A': None, 'Y': None})

try:

check_model(onnx_model)

except Exception as e:

print(type(e), e)

sess = InferenceSession(onnx_model.SerializeToString(),

providers=["CPUExecutionProvider"])

res = sess.run(None, {

'X': numpy.random.randn(2, 2).astype(numpy.float32),

'A': numpy.random.randn(2).astype(numpy.float32)})

print(res)

print("----------- end")

----------- case 1: 2D x 2D -> 2D

[array([[-2.4926333 , 1.1376997 ],

[-0.69576764, 0.54724586]], dtype=float32)]

----------- case 2: 2D x 1D -> 1D

[array([-0.38799438, 0.3706819 ], dtype=float32)]

----------- case 3: 2D x 0D -> 0D

[ONNXRuntimeError] : 1 : FAIL : Node () Op (MatMul) [ShapeInferenceError] Input tensors of wrong rank (0).

----------- case 4: 2D x None -> None

<class 'onnx.onnx_cpp2py_export.checker.ValidationError'> Field 'shape' of 'type' is required but missing.

[array([-0.17490679, 0.70208013], dtype=float32)]

----------- end